One does not simply ChatGPT a contract

Very little is understood about how ChatGPT and large language models work, but that has not stopped people from having opinions about them.

Seemingly overnight, ChatGPT garnered 100 million users in two months. OpenAI recently launched a premium service, which offers little beyond ensuring you can access ChatGPT whenever you want. It appears that OpenAI did not expect ChatGPT to be so successful:

Before ChatGPT’s launch, some OpenAI employees were skeptical that the project would succeed. An A.I. chatbot that Meta had released months earlier, BlenderBot, had flopped, and another Meta A.I. project, Galactica, was pulled down after just three days. Some employees, desensitized by daily exposure to state-of-the-art A.I. systems, thought that a chatbot built on a two-year-old A.I. model might seem boring.

So what made ChatGPT so successful?

I have been thinking very hard about this question. Is it because ChatGPT opened up the “dark arts” of prompt engineering to the broader public? Knowing you can command a language model using your words is intriguing. However, you can already do this with GPT-3, so it’s not earth-shattering.

My current conclusion is that ChatGPT’s success may be due to another “dark” and often overlooked art: MLOps. When deploying a machine learning service, how do you balance response times, model capability, computing power, and costs? Can you keep your service continuously available while you iterate on and develop it? ChatGPT seems to have found a very sweet spot. I am afraid the answers to those questions might be too geeky for most people.

A tweet I found inadvertently touched on those topics:

Do we still need Law as Code / Data in the age of LLM and ChatGPT? Why bother if ChatGPT can somehow reason on the legaleese directly, and will get better with time? I think we do.🧵

— Pierre-Loic Doulcet (@hexapode) February 16, 2023

The idea of passing a 400,000-word insurance contract to ChatGPT (or GPT-3, for that matter) and expecting something useful to happen is borderline absurd. As the tweet explains, it’s expensive, slow and not valuable. A large language model, even accompanied by a superb agent like ChatGPT, is no genie in a lamp.
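To put rough numbers on “expensive and slow”, here is a back-of-envelope sketch. It assumes, as OpenAI’s tokenizer guidance suggests for English text, that a token is roughly three-quarters of a word, and that a GPT-3-era model has a context window of about 4,096 tokens; reserving 1,024 tokens per prompt for instructions and the answer is an arbitrary choice. None of these figures come from the tweet.

```python
import math

# Assumptions, not figures from the post or the tweet:
WORDS_PER_TOKEN = 0.75        # rough rule of thumb for English text
CONTEXT_WINDOW_TOKENS = 4096  # roughly a GPT-3-era context window


def estimate_tokens(word_count: int) -> int:
    """Approximate the number of tokens in English prose of word_count words."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def prompts_needed(word_count: int, reserved_tokens: int = 1024) -> int:
    """Minimum number of prompts needed to show the model every word once,
    when each prompt reserves reserved_tokens for instructions and the answer."""
    usable_tokens = CONTEXT_WINDOW_TOKENS - reserved_tokens
    return math.ceil(estimate_tokens(word_count) / usable_tokens)


contract_words = 400_000
print(estimate_tokens(contract_words))  # 533334
print(prompts_needed(contract_words))   # 174
```

Under these assumptions, the contract is about 533,000 tokens, so the model would need at least 174 separate prompts just to read it once, each one billed and each one a separate round trip.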

You would probably want to split the contract into meaningful pieces (which aren’t necessarily clauses), convert them into code or data that the language model can work with, and then run them through the model. In a product like a chatbot, you might need to classify a request to select the correct response, or perform reinforcement learning to improve it. These actions probably require you to call multiple models or resources for a single request.
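A minimal sketch of the splitting step, assuming that paragraphs separated by blank lines are a reasonable unit and that a fixed word budget per piece suits the model; both are illustrative choices, and `split_into_chunks` is a hypothetical helper. A real contract splitter would likely also respect headings, definitions and cross-references, since meaningful pieces are not necessarily clauses.

```python
from typing import List


def split_into_chunks(text: str, max_words: int = 300) -> List[str]:
    """Greedily pack consecutive paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words becomes its own chunk rather
    than being cut mid-sentence.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current: List[str] = []
    current_words = 0
    for para in paragraphs:
        n_words = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and current_words + n_words > max_words:
            chunks.append("\n\n".join(current))
            current, current_words = [], 0
        current.append(para)
        current_words += n_words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


sample = (
    "1. Definitions\n\nInsured means the person named in the schedule.\n\n"
    "2. Cover\n\nWe will pay for accidental damage to the vehicle."
)
for chunk in split_into_chunks(sample, max_words=11):
    print(repr(chunk))
```

With an 11-word budget, the sample splits into two chunks, each keeping a heading together with its body. Packing whole paragraphs keeps each piece readable at the cost of uneven chunk sizes.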

In short, one does not simply ChatGPT a contract.

Experiments go awry

I learned the importance of MLOps while experimenting with augmenting GPT-3 with my own data. The premise is straightforward: GPT-3 knows a lot about documents, so by directing its attention to a particular set of documents, you can focus its power on them.

Technically, this is how it goes:

- Convert a set of documents into vectors and store them in a vector database.

- Convert the request you receive into a vector and find the four most relevant documents in your database.

- Include these documents in a prompt, then ask GPT-3 to write a response using those documents as context.
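The three steps above can be sketched in plain Python. The post does not say which embedding model or vector database was used, so this sketch swaps in a toy bag-of-words vector and an in-memory list (`InMemoryVectorStore` is hypothetical); a real setup would use an embedding model and a proper vector database. Only the choice of four documents comes from the post. The final GPT-3 request is left out so the sketch runs offline.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical placeholder for a vector database: a list of (text, vector) pairs."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        # Step 1: convert each document into a vector and store it.
        self.items.append((doc, embed(doc)))

    def top_k(self, query: str, k: int = 4) -> List[str]:
        # Step 2: convert the request into a vector and rank documents by similarity.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_prompt(question: str, docs: List[str]) -> str:
    # Step 3: put the retrieved documents into the prompt as context.
    context = "\n\n".join(f"Document {i + 1}:\n{d}" for i, d in enumerate(docs))
    return (
        "Answer the question using only the documents below.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


store = InMemoryVectorStore()
for doc in [
    "The Citi Rewards Card earns points on online shopping.",
    "The DBS Woman's World Card earns miles on online spending.",
    "A guide to airport lounge access.",
    "How to transfer points to an airline partner.",
    "A hotel review from Tokyo.",
]:
    store.add(doc)

question = "Which card is best for online spending?"
prompt = build_prompt(question, store.top_k(question, k=4))
print(prompt)  # this string would then be sent to GPT-3
```

Here the DBS document ranks first and the Citi document second, because they share the most words with the question. Real embeddings capture meaning rather than word overlap, but the flow of store, retrieve and prompt stays the same.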

Can a directed search beat ChatGPT? The preliminary results of my experiment suggest that it can.

I wanted to improve my results. In a nutshell, my plan was to improve the “context”. I would break the documents down into smaller pieces and have GPT-3 write a summary of each one instead of relying on the summary provided by the author (which aims to garner views rather than to inform). I expected GPT-3 to reason better when it knew more.
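The plan can be sketched as follows. `summarize` stands in for a GPT-3 summarization request; here `fake_summarize` is a hypothetical offline placeholder that keeps the first 12 words, and the 200-word piece size is an arbitrary assumption. The sketch counts calls to show how the workload grows.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class IndexedPiece:
    source: str   # title of the article the piece came from
    summary: str  # model-written summary: the text that gets embedded and searched
    text: str     # the full piece, later passed to the model as context


def split_words(text: str, size: int) -> List[str]:
    """Cut text into consecutive pieces of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def build_index(articles: Dict[str, str], summarize: Callable[[str], str],
                piece_words: int = 200) -> List[IndexedPiece]:
    """Split every article into pieces and summarize each piece.

    `summarize` is called once per piece, so the number of model calls grows
    with the total length of the corpus, not with the number of articles.
    """
    index = []
    for title, body in articles.items():
        for piece in split_words(body, piece_words):
            index.append(IndexedPiece(title, summarize(piece), piece))
    return index


def fake_summarize(text: str) -> str:
    """Hypothetical offline stand-in for a GPT-3 summarization request."""
    return " ".join(text.split()[:12])


calls = []


def counting_summarize(text: str) -> str:
    # Record every "API call" so the workload can be counted.
    calls.append(text)
    return fake_summarize(text)


articles = {"Card review": "word " * 450, "Lounge guide": "word " * 150}
index = build_index(articles, counting_summarize)
print(len(index), len(calls))  # 4 4
```

Two articles already cost four summarization calls. Every call is a network round trip and a billed request, so a whole blog archive multiplies both.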

I didn’t get the result I wanted.

Since I had GPT-3 summarize every piece of every article, I made many calls to the OpenAI API. Each call takes a few seconds to travel to OpenAI and back, which added hours to the time it took to prepare the database.
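The arithmetic behind those hours is simple: waiting time is roughly the number of calls multiplied by the round-trip time. The figures below (5,000 calls at about 3 seconds each) are hypothetical, since the post does not give its actual counts. The `concurrency` parameter is also an illustrative assumption: sending requests in parallel is one common MLOps lever, although OpenAI’s rate limits would cap it in practice.

```python
import math


def prep_time_hours(num_calls: int, seconds_per_call: float, concurrency: int = 1) -> float:
    """Wall-clock hours spent waiting on API round trips.

    Calls are made `concurrency` at a time; rate limits, retries and local
    processing are ignored, so this is a lower bound, not a forecast.
    """
    batches = math.ceil(num_calls / concurrency)
    return batches * seconds_per_call / 3600


# Hypothetical figures: 5,000 pieces to summarize at about 3 seconds per call.
print(round(prep_time_hours(5_000, 3.0), 2))                 # 4.17
print(round(prep_time_hours(5_000, 3.0, concurrency=8), 2))  # 0.52
```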

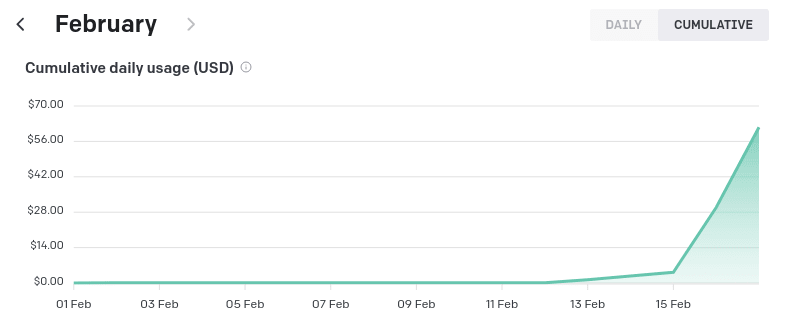

Of course, my OpenAI costs went through the roof.

Finally, it is debatable whether the results were improved.

| Question | Should I use the DBS Woman’s World Card or Citi Rewards Card for online spending? |
|---|---|
| ChatGPT | Both the DBS Woman’s World Card and the Citi Rewards Card have rewards programs that can be beneficial for online spending. However, the best card for you will depend on your specific spending habits and preferences. The DBS Woman’s World Card gives you 6X rewards on online fashion and beauty, 4X rewards on online travel bookings and 2X rewards on online shopping and everyday spends. The Citi Rewards Card gives you 10X rewards points on online shopping and dining. You should consider your spending habits and which category you spend more on, then compare the rewards, benefits, and fees of each card to determine which one will give you the most value. It’s also worth considering any promotions or bonus rewards that the card issuer may be offering. |
| Milelion comments on ChatGPT | Swing and a miss for ChatGPT here. It spouts gibberish about the DBS Woman’s World Card’s 2X, 4X and 6X rewards, when it’s as simple as 10X rewards for all online spending that’s not on the general exclusions list. Likewise, it limits the 10X rewards points on the Citi Rewards Card to online shopping and dining, when it should be all online transactions except travel and mobile wallet. I would have loved to see ChatGPT bring in other factors like points expiry, number of transfer partners, and points pooling when comparing these two cards. |
| Milerobo v1 | The DBS Woman’s World Card is an excellent option for online spending, and an easy way to generate up to 96,000 miles a year with just S$2,000 of expenditure per month. It earns 4 mpd on all online spending, with a relatively high 4 mpd cap of S$2,000 per month. The Citi Rewards Card also earns 4 mpd on online spending, but it has a lower cap of S$1,000 per month. The Citi Rewards Card has the advantage of having a longer points validity period (one year for DBS Points on the Woman’s World Card versus up to five years for Citi Rewards). |
| Milerobo v2 | It depends on your individual needs and preferences. The DBS Woman's World Card offers 4 mpd on all online spending, capped at S$2,000 per month, and points are valid for one year. The Citi Rewards Card offers 4 mpd on online spending, with a longer points expiry period of up to five years, but the points are not pooled with other DBS cards. |

Machine learning is a dark art.

More considered responses to ChatGPT, please

Having played around with GPT-3, I am more aware of the limitations and possibilities of large language models in law. In much of the popular commentary on the subject, though, I find little nuance in how such technology is discussed.

From now on, whenever I read a commentary about ChatGPT or GPT-3, I will replace those words with “thingamajiggy” and figure out whether the author knows what they’re talking about, or whether it’s just something that sprang from their imagination.

Talking about artificial intelligence, ChatGPT or GPT-3 based on what you suspect it to be rather than what it actually is does your readers a big disservice. For one, it’s a strawman: once you establish that the current technology cannot do what you imagined, the argument immediately becomes a dismissal of the entire technology. That’s a siren song for lawyers who fear ChatGPT will come for their jobs, and the real possibilities of such technologies get lost.

In the meantime, I’ll follow those whose opinions are worth listening to. I’ll also keep experimenting and parlay what I learn into skills and business ideas.

How are you dealing with the hype surrounding ChatGPT these days?
