What's up with the upcoming AI apocalypse?

The angst about the #AI apocalypse shouldn't be about the Cthulhu apocalypse. At the very least, it should be about something that works.

Fresh from learning what happens if you use #ChatGPT (wrongly) in court, I find that not a day goes by without some dire warning about large language models and AI.

Compared to the other statement led by Elon Musk, this statement was signed by a broad cross-section of industry leaders from the likes of #OpenAI, Google and Anthropic. Is there an industry consensus that AI is so dangerous that it needs to be managed in the same way as nuclear weapons?

A closer examination of the statement they signed is revealing. It is only 22 words long – “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.” Notably, it doesn't say how or why AI leads to the extinction of the human race. All it suggests is that we should worry about it because we can associate AI with real apocalypses.

I am sceptical that Large Language Models or Generative AI will have this effect. We've been here before: cryptocurrency was supposed to kill the banking industry, and the metaverse was supposed to change how we relate to life forever.

I am sceptical because the work required to apply such models in practice is hard. So what if computers understand a lot more about text than they used to? So what if computers can generate contract clauses? Are they the clauses the user wants? Are they the clauses anyone wants? Once you start zeroing in on the problem you want to solve, suddenly, it can't be solved by a single prompt. You're going to need expertise. Worse still, you might even need industry consensus (Gasp!).

The makers of spaCy suggest that using LLMs as the sole solution to real problems is probably impractical and worse.

The most ironic discovery I've had so far in my LLM adventure is that when computers can finally generate fine text, “real” data still remains the most critical asset. Simply stated, garbage in, garbage out. Whatever the model doesn't know, it doesn't know.

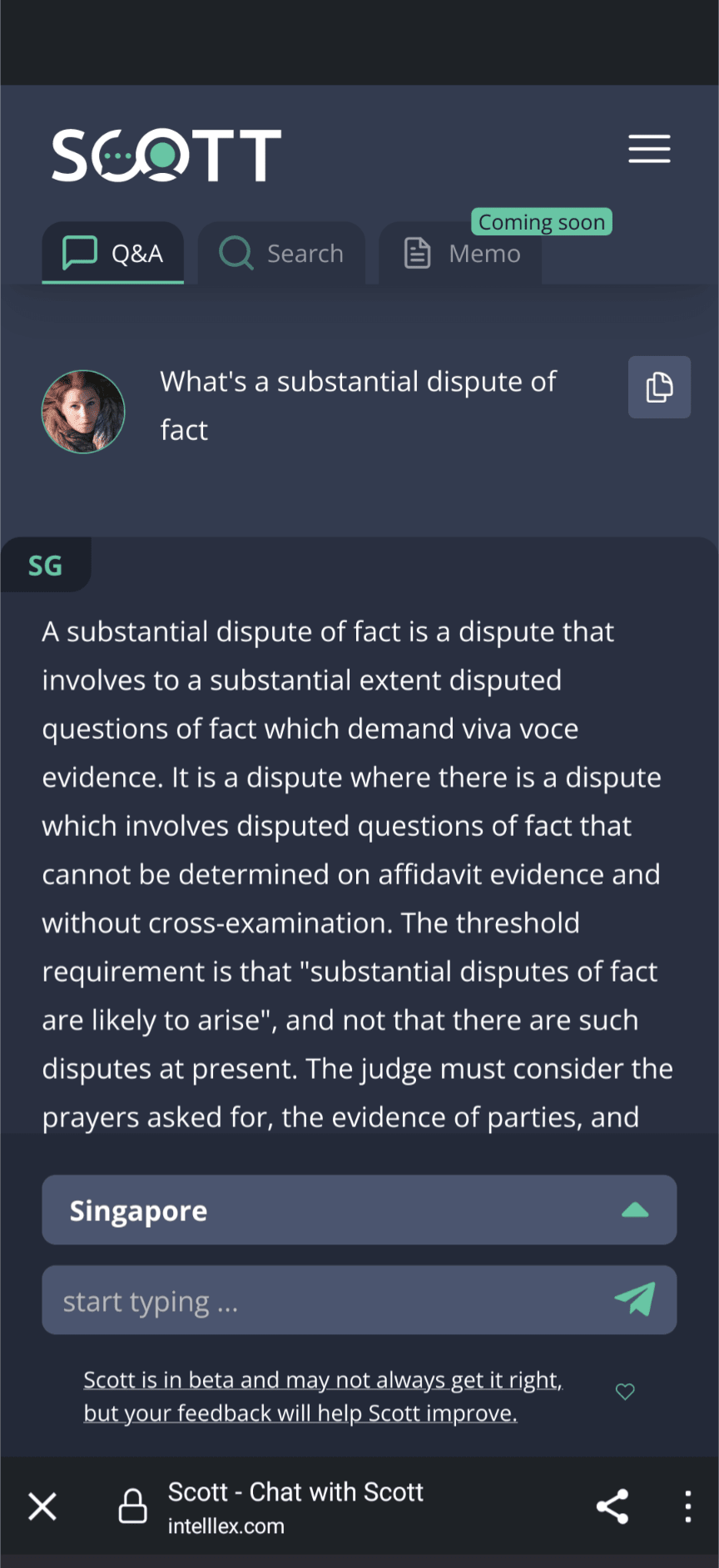

Originally, I was curious about applying ChatGPT to Singapore Law data: Augmenting ChatGPT with Your Data Can Improve Its Performance. I am pleasantly surprised that someone else took it all the way with a chatbot named Scott. From what I can tell, it does broadly the same thing as my experiment: it picks the three paragraphs from Singapore Supreme Court judgements which are closest to the query, and then generates text based on the prompt with that context.
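To make the "closest three paragraphs, then generate" step concrete, here is a minimal sketch. It is not the code behind Scott or my experiment: it assumes a simple bag-of-words cosine similarity in place of real embeddings, and the names `top_paragraphs` and `build_prompt` are made up for illustration. The final call to a language model is left out; the sketch only assembles the prompt that would be sent.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_paragraphs(query, paragraphs, k=3):
    """Return the k paragraphs most similar to the query (hypothetical helper).

    Assumption: word overlap stands in for the embedding search
    a real system would use.
    """
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(p))), p)
        for p in paragraphs
    ]
    # Highest score first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query, paragraphs, k=3):
    """Assemble a prompt containing the retrieved context and the question."""
    context = "\n\n".join(top_paragraphs(query, paragraphs, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

Whatever the retrieval step cannot find never reaches the model, which is why the quality of the underlying paragraphs matters more than the prompt.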

The results were pretty fascinating, in my opinion:

However, it performed poorly on tedious questions about litigation, for example, on security for costs or resisting amendments to pleadings.

I don't blame Scott. The data was based on judgements published by the courts. It's biased towards grand questions of great legal import (that's what judges who publish opinions care about, of course). However, the audience for that kind of assistance is going to be narrow. Contrary to popular assumptions, AI is not going to replace the most boring work that associates do on a day-to-day basis.

If you decided that the solution to this problem is to get more data, then where are you going to get it? For my example of security for costs and the amendment to pleadings, you'd have to sit in on the hearings of the registrar and other deputy judges deciding these questions every day. The reasons for the orders are usually not detailed, let alone written. They are inaccessible to a large language model.

If you decide that your solution will ignore these unglamorous questions, how would you stop nosy parkers (read: me) from trying to uncover its limits? You will have to spend time moderating responses (an equally unglamorous activity) and hope they are accurate, or else your solution will be cited in court.

The adage that “all models are wrong, but some are useful” rings true here.

Don't get me wrong. There are real dangers in AI, but probably not the Cthulhu apocalypse. There are also good solutions to these dangers (I am particularly interested in ensuring that responses from LLMs can be traced back to the person or company who created the model), but I don't think they can be realistically implemented.

The real issue is whether AI will be useful, and that depends on the thousands of people like you and me, frantically trying to make it work. If it turns out that generative AI is too unreliable, too expensive or too dangerous, it will go the way of the many other topics the hype machine churns out on a regular basis. At present, it's too early to tell.

So what are you building with generative AI today?
